29 Mar 2024 - 15 min read

Fargate meets Terraform

Dennis Winnepenninckx

Creating CI/CD pipelines to automatically push Docker images to AWS ECR can be a challenge, despite plenty of documentation being available.

The last flow I created required specific technologies to be used, mainly Terraform and AWS Fargate. When working on this task, I hungered for a more step-by-step guide. That's where this blog comes into play!

This post aims to simplify the process by offering a to-the-point guide to setting up Fargate infrastructure using Terraform.

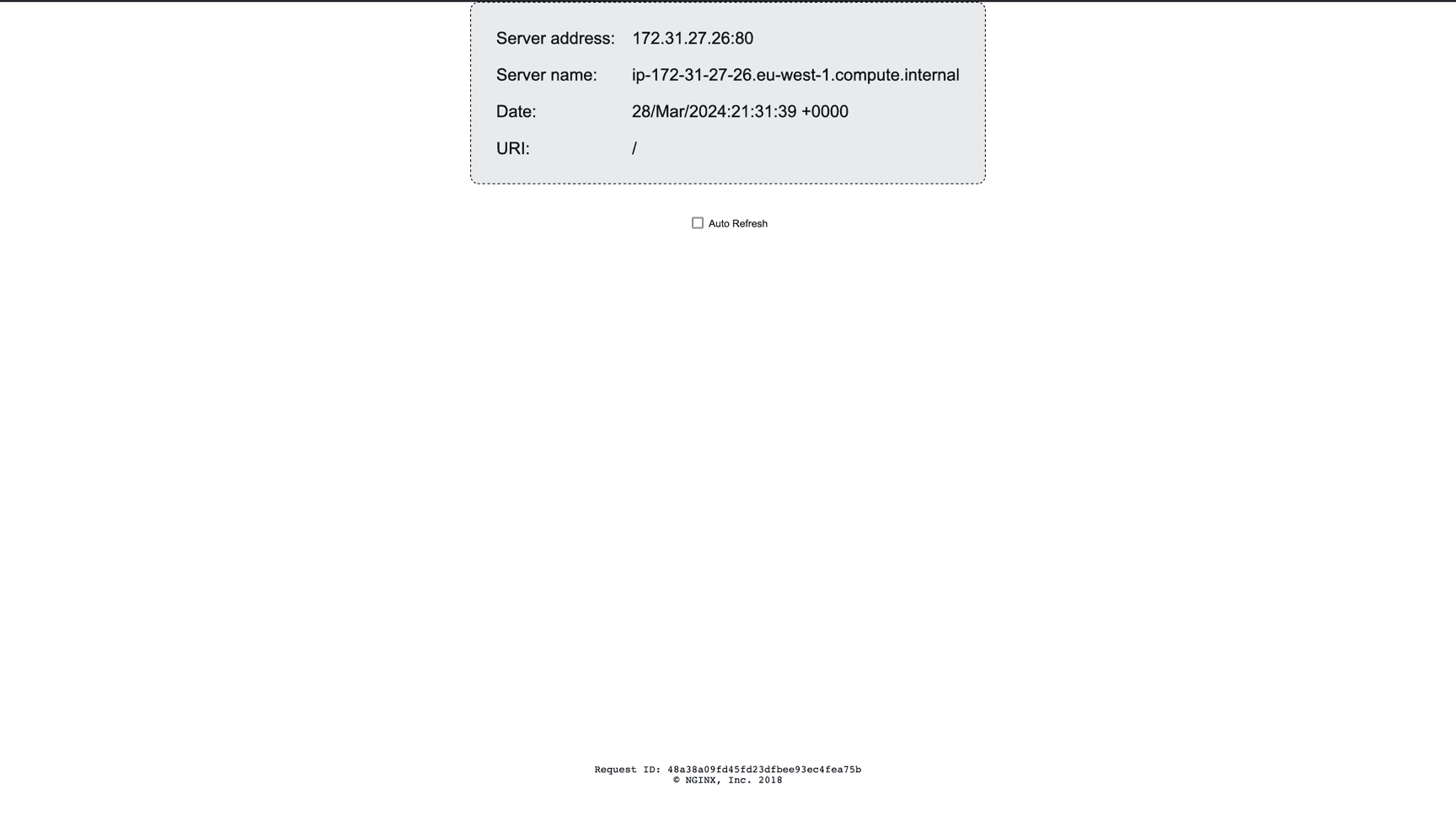

At the end of the guide (or by simply cloning the repositories and running them) you will have an ALB connected to a Fargate container running a default NGINX page.

Prerequisites

First, some context! In this blog we will talk about 2 repositories: 'Infra' and 'Client'. The Client application is a small NGINX demo that will host a default page. Infra will be responsible for creating all our AWS resources, such as the ALB, the ECR repository, and the Fargate tasks. Here you can find the code that I'm using for the Client and Infra projects.

The guide also assumes you have access to an AWS account and a GitLab environment with configured runners.

Infrastructure

As explained above, the purpose of the infrastructure repository is to quickly set up and destroy our AWS resources. This means we can easily deploy our application on different AWS accounts simply by altering our credentials.

For more information about the code and how to run it, you can check out the repository's readme.
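The Terraform snippets in this post read three input variables: `var.vpc_id`, `var.subnet_ids` (passed through `split(",", ...)`, so a comma-separated string), and `var.routing_table_id`. As a minimal sketch, assuming those variables are declared in the root module, they can be supplied through Terraform's `TF_VAR_` environment variables. The IDs below are placeholders and `join_ids` is a hypothetical helper, not something taken from the repository.

```shell
#!/bin/sh
# Join IDs into the comma-separated form that split(",", var.subnet_ids) expects.
join_ids() {
  (IFS=,; printf '%s\n' "$*")
}

# Placeholder values: replace them with IDs from the target AWS account.
export TF_VAR_vpc_id="vpc-00000000000000000"
export TF_VAR_routing_table_id="rtb-00000000000000000"
TF_VAR_subnet_ids="$(join_ids subnet-aaaaaaaa subnet-bbbbbbbb)"
export TF_VAR_subnet_ids

# With the variables exported, the usual workflow applies:
#   terraform init && terraform plan
```

Switching to another account then comes down to changing the credentials and these three values.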

ALB

Before we get started on the Fargate setup we need an ALB so we can eventually connect to our containers.

// When you create a Fargate task you need to link an ALB to access the container.

// In this example we will create an ALB first

resource "aws_security_group" "ftb_client_alb_sg" {

name = "ftb-alb-client-sg"

description = "Allow incoming http traffic"

vpc_id = var.vpc_id

ingress {

from_port = 80

to_port = 80

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

from_port = 80

to_port = 80

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

}

resource "aws_lb" "client_alb" {

name = "ftb-alb-client"

load_balancer_type = "application"

security_groups = [

aws_security_group.ftb_client_alb_sg.id

]

subnets = split(",", var.subnet_ids)

}

// Health check endpoint should be defined for your specific application

// Instances will go unhealthy when the healthcheck fails

resource "aws_lb_target_group" "alb_client_target_group" {

name = "ftb-alb-client-tg"

port = 80

protocol = "HTTP"

target_type = "ip"

vpc_id = var.vpc_id

health_check {

enabled = true

interval = 30

path = "/"

port = 80

protocol = "HTTP"

healthy_threshold = 3

unhealthy_threshold = 3

timeout = 6

matcher = "200,307"

}

}

resource "aws_lb_listener" "alb_client_listener_http" {

load_balancer_arn = aws_lb.client_alb.arn

port = 80

protocol = "HTTP"

default_action {

type = "forward"

target_group_arn = aws_lb_target_group.alb_client_target_group.arn

}

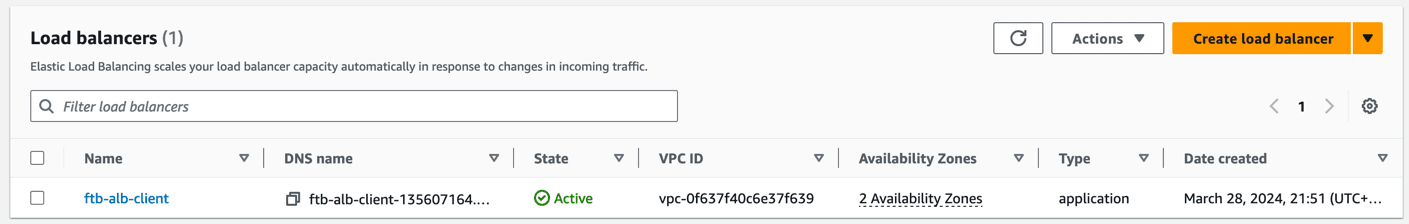

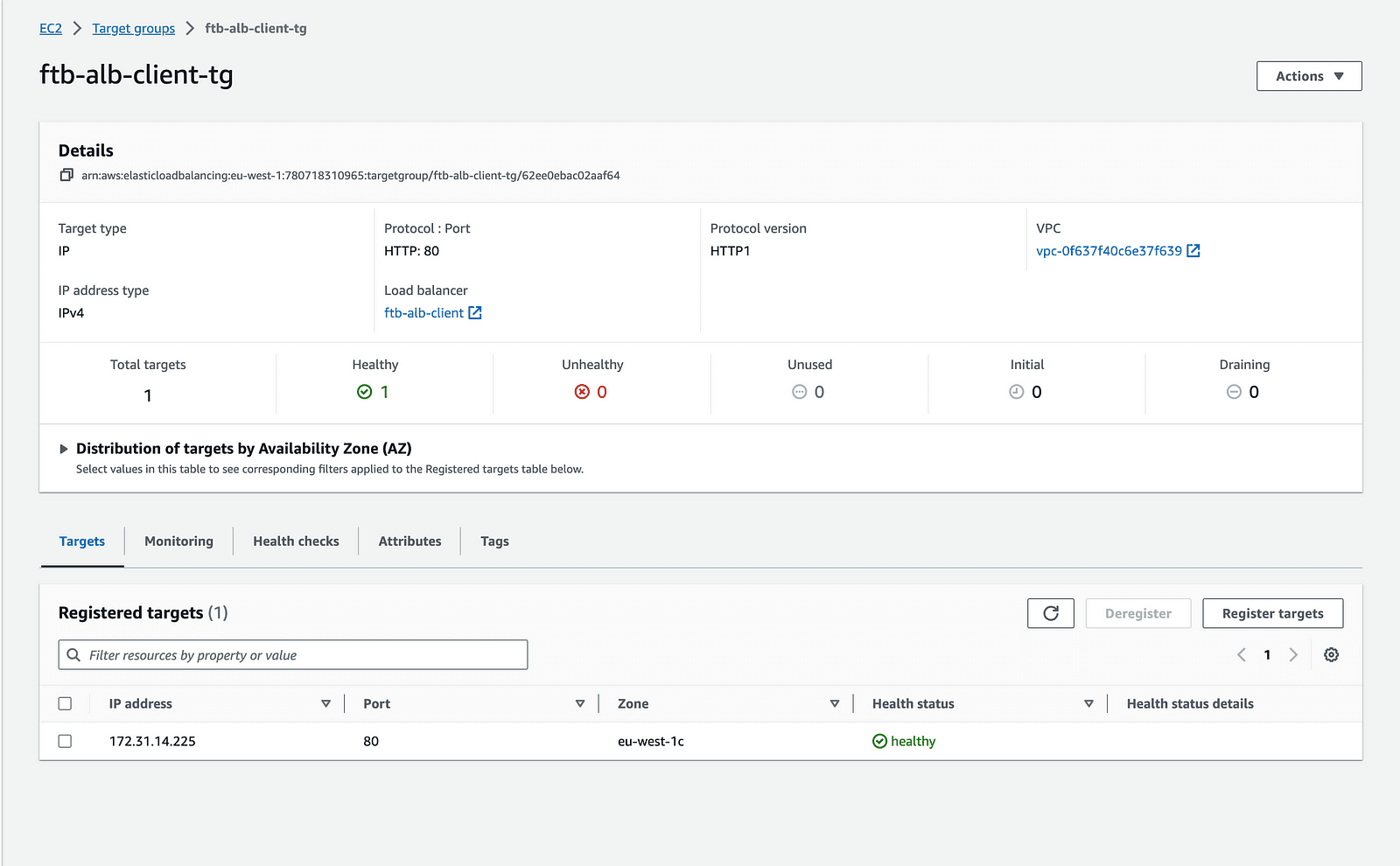

}This should leave you with the following setup in AWS when visiting the EC2 - Load balancers page:

Creating the ALB leaves us with a DNS name we can use to access our upcoming Fargate containers.
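If you prefer the terminal over the console, the DNS name can also be looked up with the AWS CLI. This is a sketch under the assumption that the CLI is installed and authenticated for the right account and region; `alb_dns_name` and `alb_url` are hypothetical helper names, and the default ALB name is the one from the Terraform above.

```shell
#!/bin/sh
# Look up the DNS name of the ALB (defaults to the name used in the Terraform above).
alb_dns_name() {
  aws elbv2 describe-load-balancers \
    --names "${1:-ftb-alb-client}" \
    --query 'LoadBalancers[0].DNSName' \
    --output text
}

# Turn a DNS name into the plain HTTP URL served by the port 80 listener.
alb_url() {
  printf 'http://%s/\n' "$1"
}

# Usage (needs AWS credentials):
#   alb_url "$(alb_dns_name)"
```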

VPC Endpoints

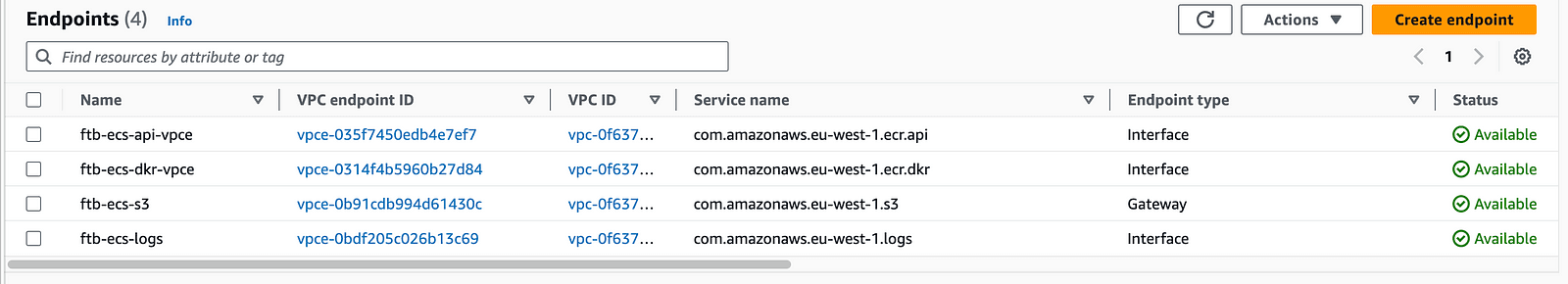

This step is only required if you deploy your ECS service in a private subnet.

If you do this, AWS needs some help routing traffic between ECS and ECR.

ECR stores image layers in S3, so pulling an image also requires an S3 endpoint, and if you want any logging to CloudWatch you need yet another endpoint.

In Terraform this is done by executing the following:

// ECS service needs to be able to communicate with ECR service.

// For this we create VPC Endpoints

// This is only necessary if you deploy ECS in a private subnet

resource "aws_security_group" "ecs_vpce_service_sg" {

name = "ftb-ecs-vpce-sg"

description = "Allow incoming http/HTTPS traffic"

vpc_id = var.vpc_id

ingress {

from_port = 80

to_port = 80

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

ingress {

from_port = 443

to_port = 443

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

from_port = 80

to_port = 80

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

from_port = 443

to_port = 443

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

}

resource "aws_vpc_endpoint" "s3_api_vpce_gw" {

vpc_id = var.vpc_id

service_name = "com.amazonaws.eu-west-1.s3"

vpc_endpoint_type = "Gateway"

tags = {

"Name" : "ftb-ecs-api-s3-gw"

}

}

// Here we link the default routing table to the VPC GW Endpoint.

// This makes sure that our network can route traffic from ECR to S3

resource "aws_vpc_endpoint_route_table_association" "example" {

vpc_endpoint_id = aws_vpc_endpoint.s3_api_vpce_gw.id

route_table_id = var.routing_table_id

}

resource "aws_vpc_endpoint" "ecr_dkr_vpce" {

vpc_id = var.vpc_id

service_name = "com.amazonaws.eu-west-1.ecr.dkr"

vpc_endpoint_type = "Interface"

security_group_ids = [

aws_security_group.ecs_vpce_service_sg.id

]

subnet_ids = split(",", var.subnet_ids)

tags = {

"Name" : "ftb-ecs-dkr-vpce"

}

private_dns_enabled = true

}

// ECR stores image layers in S3

resource "aws_vpc_endpoint" "ecr_s3_vpce" {

vpc_id = var.vpc_id

service_name = "com.amazonaws.eu-west-1.s3"

vpc_endpoint_type = "Gateway"

tags = {

"Name" : "ftb-ecs-s3"

}

}

resource "aws_vpc_endpoint" "ecr_logs_vpce" {

vpc_id = var.vpc_id

service_name = "com.amazonaws.eu-west-1.logs"

vpc_endpoint_type = "Interface"

security_group_ids = [

aws_security_group.ecs_vpce_service_sg.id

]

subnet_ids = split(",", var.subnet_ids)

private_dns_enabled = true

tags = {

"Name" : "ftb-ecs-logs"

}

}

resource "aws_vpc_endpoint" "ecr_api_vpce" {

vpc_id = var.vpc_id

service_name = "com.amazonaws.eu-west-1.ecr.api"

vpc_endpoint_type = "Interface"

security_group_ids = [

aws_security_group.ecs_vpce_service_sg.id

]

subnet_ids = split(",", var.subnet_ids)

tags = {

"Name" : "ftb-ecs-api-vpce"

}

private_dns_enabled = true

}

This is where I had the most trouble when deploying my infrastructure! Leaving out these VPC endpoints, or creating them without assigning a routing table to the VPC GW Endpoint, leads to a lot of debugging, because the error messages in ECS are well hidden!
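When a task refuses to start in a private subnet, two checks tend to narrow things down: do the endpoints exist, and what reason did ECS record for the stopped task? The sketch below assumes an authenticated AWS CLI. The function names are hypothetical; the default region and cluster name are the ones used in the Terraform in this post.

```shell
#!/bin/sh
# Print the four distinct endpoint service names used by the Terraform above.
required_endpoint_services() {
  region="${1:-eu-west-1}"
  for service in s3 ecr.dkr ecr.api logs; do
    printf 'com.amazonaws.%s.%s\n' "$region" "$service"
  done
}

# List the endpoints that actually exist in a VPC, with their type and state.
list_vpc_endpoints() {
  aws ec2 describe-vpc-endpoints \
    --filters "Name=vpc-id,Values=$1" \
    --query 'VpcEndpoints[].[ServiceName,VpcEndpointType,State]' \
    --output table
}

# Show the reason ECS recorded for one stopped task in the cluster.
stopped_task_reason() {
  cluster="${1:-ftb-Client-cluster}"
  task_arn="$(aws ecs list-tasks --cluster "$cluster" --desired-status STOPPED \
    --query 'taskArns[0]' --output text)"
  aws ecs describe-tasks --cluster "$cluster" --tasks "$task_arn" \
    --query 'tasks[0].stoppedReason' --output text
}

# Usage (needs AWS credentials; the VPC ID is a placeholder):
#   required_endpoint_services
#   list_vpc_endpoints vpc-00000000000000000
#   stopped_task_reason
```

Comparing the output of the first two functions shows quickly whether a service is missing from the VPC.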

The above code will result in 4 endpoints being created when you visit VPC - Endpoints:

Elastic Container Registry

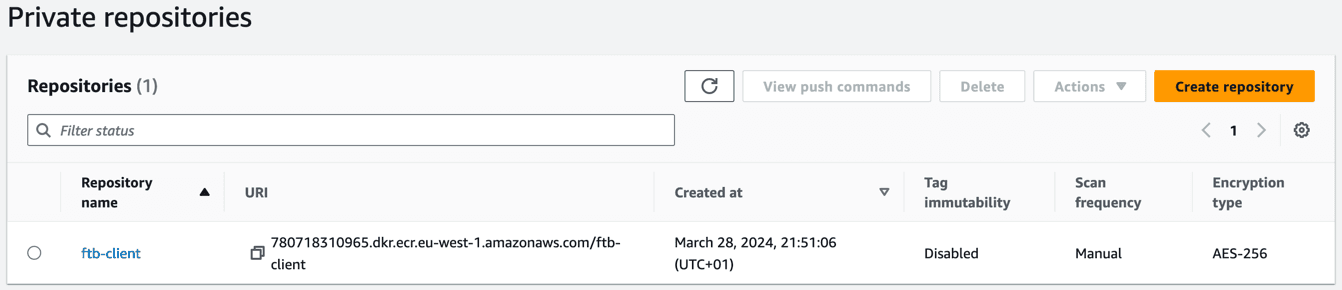

Later in this guide we will make a Client application which will be created from a simple Dockerfile that will show the default NGINX page. The image that we make from this Dockerfile needs to live somewhere. That's where AWS ECR steps in as our image registry.

The following Terraform code will make an ECR repository that only keeps the last 5 pushed images so we don't clutter the ECR with old images.

// Start making the ECR

// Create ECR repository where Client docker file will be uploaded

resource "aws_ecr_repository" "client_ecr_repository" {

name = "ftb-client"

}

// Only keep the 5 latest images in our ECR repository

resource "aws_ecr_lifecycle_policy" "ecr_lifecycle_policy" {

repository = aws_ecr_repository.client_ecr_repository.name

policy = <<EOF

{

"rules": [

{

"rulePriority": 1,

"description": "Keep last 5 images",

"selection": {

"tagStatus": "tagged",

"tagPrefixList": ["ftb"],

"countType": "imageCountMoreThan",

"countNumber": 5

},

"action": {

"type": "expire"

}

}

]

}

EOF

}

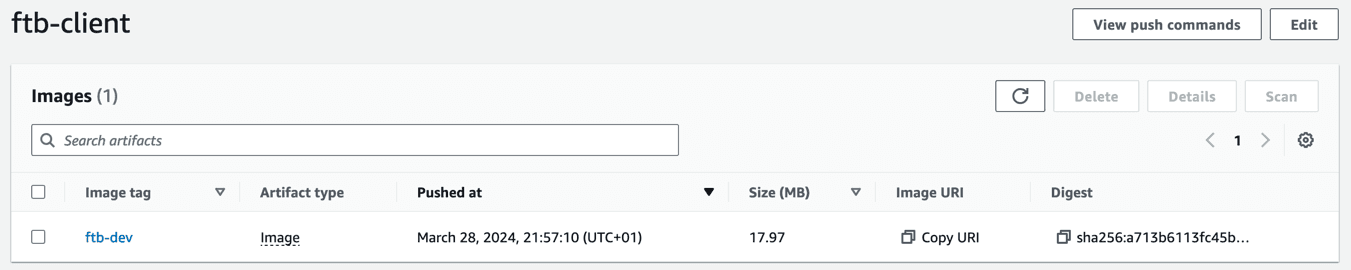

This will create a new private repository in AWS ECR:
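One detail worth spelling out: the lifecycle rule only selects images whose tag starts with `ftb`, which is why the pipelines later in this post tag images as `ftb-<commit hash>`. Images pushed only under other tags are not selected by this rule. A tiny sketch of that convention follows; the helper names are hypothetical, and the last function assumes an authenticated AWS CLI.

```shell
#!/bin/sh
# Build the image tag used by the pipelines in this post: "ftb-<commit hash>".
image_tag() {
  printf 'ftb-%s\n' "$1"
}

# Succeed if a tag starts with the prefix the lifecycle rule selects on.
matches_lifecycle_prefix() {
  case "$1" in
    ftb*) return 0 ;;
    *)    return 1 ;;
  esac
}

# List the tags currently stored in the repository.
list_image_tags() {
  aws ecr describe-images --repository-name "${1:-ftb-client}" \
    --query 'imageDetails[].imageTags[]' --output text
}

# Usage:
#   matches_lifecycle_prefix "$(image_tag abc1234)" && echo "covered by the rule"
```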

Elastic Container Service

Finally, we can start on ECS! To have a functional setup with Fargate we need an ECS cluster (which manages our tasks), a task role, a task execution role, a task definition, and the ECS service.

// Start making the ECS

// Security group for service allowing communication on HTTP port

resource "aws_security_group" "ecs_client_service_sg" {

name = "fargate-terraform-blog-ECS-Service-sg"

description = "Allow incoming http traffic"

vpc_id = var.vpc_id

ingress {

from_port = 80

to_port = 80

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

from_port = 80

to_port = 80

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

ingress {

from_port = 443

to_port = 443

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

from_port = 443

to_port = 443

protocol = "TCP"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

}

// Create ECS cluster

resource "aws_ecs_cluster" "client_cluster" {

name = "ftb-Client-cluster"

}

// Create a cloudwatch log group our containers can log into

resource "aws_cloudwatch_log_group" "yada" {

name = "/ecs/ftb/client"

retention_in_days = 7

}

// Create the task execution role

resource "aws_iam_role" "ecs_task_execution_role" {

name = "ftbClientECSTaskExecutionRole"

assume_role_policy = jsonencode({

Version = "2012-10-17",

Statement = [

// The underlying statement allows the ecs tasks to assume this role. Otherwise AWS will block it by default

{

Action = "sts:AssumeRole",

Effect = "Allow",

Principal = {

Service = "ecs-tasks.amazonaws.com"

}

}

]

})

}

resource "aws_iam_policy" "custom_policy_task_execution_role" {

name = "ftbClientECSTaskExecutionRole-policy"

description = "Custom IAM policy for Fargate client task execution role"

policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Action = ["ecs:RunTask", "ecs:StopTask", "ecs:DescribeTasks", "ssm:GetParameters", "ecr:GetAuthorizationToken", "ecr:BatchCheckLayerAvailability", "ecr:GetDownloadUrlForLayer", "ecr:BatchGetImage"],

Effect = "Allow",

Resource = "*",

},

{

Action = ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],

Effect = "Allow",

Resource = "arn:aws:logs:*:*:*",

}

],

})

}

resource "aws_iam_policy" "custom_policy_task_role" {

name = "ftbClientECSTaskRole-policy"

description = "Custom IAM policy for Fargate client task role"

policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Action = ["ecs:RunTask", "ecs:StopTask", "ecs:DescribeTasks", "ssm:GetParameters", "ecr:GetAuthorizationToken", "ecr:BatchCheckLayerAvailability", "ecr:GetDownloadUrlForLayer", "ecr:BatchGetImage"],

Effect = "Allow",

Resource = "*",

},

{

Action = ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],

Effect = "Allow",

Resource = "arn:aws:logs:*:*:*",

}

],

})

}

resource "aws_iam_role_policy_attachment" "custom_policy_attachment_task_execution_role" {

policy_arn = aws_iam_policy.custom_policy_task_execution_role.arn

role = aws_iam_role.ecs_task_execution_role.name

}

resource "aws_iam_role" "ecs_task_role" {

name = "ftbClientECSTaskRole"

assume_role_policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Action = "sts:AssumeRole",

Effect = "Allow",

Principal = {

Service = "ecs-tasks.amazonaws.com"

}

}

]

})

}

resource "aws_iam_role_policy_attachment" "custom_policy_attachment_task_role" {

policy_arn = aws_iam_policy.custom_policy_task_role.arn

role = aws_iam_role.ecs_task_role.name

}

// Important that we add the lifecycle rule to ignore all changes.

// This will prevent a new task definition update every time we deploy our infrastructure

// This is needed since our Terraform state tracks the task definition revision number, which will change whenever we push

resource "aws_ecs_task_definition" "client_task_definition" {

container_definitions = jsonencode([{

essential = true,

image = aws_ecr_repository.client_ecr_repository.repository_url,

name = "ftb-client-container",

logConfiguration : {

"logDriver" : "awslogs",

"options" : {

"awslogs-group" : "/ecs/ftb/client",

"awslogs-region" : "eu-west-1",

"awslogs-stream-prefix" : "/ecs/ftb/client"

}

}

portMappings = [{ containerPort = 80,

hostPort: 80, }] }])

network_mode = "awsvpc"

cpu = 256

family = "ftb-client-task-definition"

execution_role_arn = aws_iam_role.ecs_task_execution_role.arn

task_role_arn = aws_iam_role.ecs_task_role.arn

memory = 512

requires_compatibilities = ["FARGATE"]

lifecycle {

ignore_changes = all

}

}

// Create the service

resource "aws_ecs_service" "client_ecs_service" {

cluster = aws_ecs_cluster.client_cluster.name

name = "ftb-client-service"

launch_type = "FARGATE"

task_definition = aws_ecs_task_definition.client_task_definition.arn

desired_count = 1

network_configuration {

security_groups = [aws_security_group.ecs_client_service_sg.id]

subnets = split(",", var.subnet_ids)

}

load_balancer {

target_group_arn = aws_lb_target_group.alb_client_target_group.arn

container_name = "ftb-client-container"

container_port = 80

}

}

// Add an auto scaling rule.

resource "aws_appautoscaling_target" "ecs_service_target" {

max_capacity = 10

min_capacity = 1

resource_id = "service/${aws_ecs_cluster.client_cluster.name}/${aws_ecs_service.client_ecs_service.name}"

scalable_dimension = "ecs:service:DesiredCount"

service_namespace = "ecs"

}

resource "aws_appautoscaling_policy" "ecs_service_scaling_policy" {

name = "ftb-ecs-service-scaling-policy"

policy_type = "TargetTrackingScaling"

resource_id = aws_appautoscaling_target.ecs_service_target.resource_id

scalable_dimension = aws_appautoscaling_target.ecs_service_target.scalable_dimension

service_namespace = "ecs"

target_tracking_scaling_policy_configuration {

predefined_metric_specification {

predefined_metric_type = "ECSServiceAverageCPUUtilization"

}

scale_in_cooldown = 60

scale_out_cooldown = 60

target_value = 70

}

}

It is important to add a lifecycle rule to the task definition. Our pipeline registers a new task definition revision on every push, so without the rule Terraform would report changes every time you deploy your infrastructure!

Another important part of the above Terraform code is linking the ECS service to the load balancer. You will not see this effect immediately, but once you get ECS containers running they will automatically register themselves with the assigned ALB.
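To confirm that registration actually happened, the target group can be queried once a task is running. A hedged sketch, assuming an authenticated AWS CLI; the function names are hypothetical, and the target group name is the one from the ALB section.

```shell
#!/bin/sh
# Resolve the ARN of the target group created in the ALB section.
target_group_arn() {
  aws elbv2 describe-target-groups --names "${1:-ftb-alb-client-tg}" \
    --query 'TargetGroups[0].TargetGroupArn' --output text
}

# Print the health state of every registered target (whitespace separated).
target_states() {
  aws elbv2 describe-target-health --target-group-arn "$1" \
    --query 'TargetHealthDescriptions[].TargetHealth.State' --output text
}

# Succeed only if at least one state is given and all of them are "healthy".
all_healthy() {
  [ "$#" -gt 0 ] || return 1
  for state in "$@"; do
    [ "$state" = "healthy" ] || return 1
  done
}

# Usage (needs AWS credentials); the unquoted expansion splits the states into words:
#   all_healthy $(target_states "$(target_group_arn)") && echo "all targets healthy"
```

An empty result means no task has registered yet, which `all_healthy` treats as a failure on purpose.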

Client application

Now that our infrastructure is set up and ready to receive our Docker image, we can think about how we can deliver it. As mentioned before, our Client application is a default NGINX page.

I prepared 2 solutions to achieve our goal of uploading our image and updating the task definition. You can find both in the Client repository.

We can simply create a GitHub action that automates most of the work for us or, for when you're using GitLab runners, you can execute scripts to deploy your images.

GitHub Actions

This is probably the easiest way. Adding a new workflow in .github/workflows will create an action that will trigger whenever you push to one of your environment branches.

name: Release Build + Push to ECR

on:

push:

branches: [template] # TODO: Enter branches that trigger CICD

jobs:

build:

name: Build Image

runs-on: ubuntu-latest

steps:

- name: Check out code

uses: actions/checkout@v2

- name: Extract branch name # Helper step to extract current branch name

shell: bash

run: echo "branch=${GITHUB_HEAD_REF:-${GITHUB_REF#refs/heads/}}" >> $GITHUB_OUTPUT

id: extract_branch

- name: Configure AWS credentials

uses: aws-actions/configure-aws-credentials@v1

with:

aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }} # TODO: Add secret to GitHub secrets

aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }} # TODO: Add secret to GitHub secrets

aws-region: eu-west-1

- name: Download existing task definition

run: | # TODO: Enter task definition name, defined in Terraform

aws ecs describe-task-definition --task-definition ftb-client-task-definition-${{ steps.extract_branch.outputs.branch }} --query taskDefinition > task-definition.json

- name: Login to Amazon ECR

id: login-ecr

uses: aws-actions/amazon-ecr-login@v1

- name: Build, tag, and push image to Amazon ECR

id: build-image

env:

ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}

ECR_REPOSITORY: ${{ secrets.ECR_REPOSITORY }}-${{ steps.extract_branch.outputs.branch }}

IMAGE_TAG: ftb-${{ github.sha }} # TODO: Add prefix for current project

run: |

docker build --build-arg env=${{ steps.extract_branch.outputs.branch }} -t $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG .

docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG

echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> $GITHUB_OUTPUT

- name: Fill in the new image ID in the Amazon ECS task definition

id: task-def

uses: aws-actions/amazon-ecs-render-task-definition@v1

with:

task-definition: task-definition.json

container-name: ftb-client-container

image: ${{ steps.build-image.outputs.image }}

- name: Deploy Amazon ECS task definition # Change the current task definition to the new one using our updated task definition. This updated task definition points to our latest image in ECR.

uses: aws-actions/amazon-ecs-deploy-task-definition@v1

with:

task-definition: ${{ steps.task-def.outputs.task-definition }}

service: ftb-client-service # TODO: Enter service name, defined in Terraform

cluster: ftb-Client-cluster # TODO: Enter cluster name, defined in Terraform

wait-for-service-stability: true

- name: Log out of Amazon ECR

if: always()

run: docker logout ${{ steps.login-ecr.outputs.registry }}

The interesting thing here is that we're doing more than just uploading a new Docker image: we're also updating our task definition so that ECS knows where to look for the newest version of our application.

Once you've set all necessary parameters, such as your action triggers and secrets, the workflow will build your Docker image, push it to ECR, and update ECS.
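The 'Extract branch name' step relies on shell parameter expansion, which is easy to misread. Here is a small sketch of what it evaluates to, assuming the usual GitHub Actions behaviour where `GITHUB_HEAD_REF` is only set for pull request events and `GITHUB_REF` looks like `refs/heads/<branch>` on a push. `branch_name` is a hypothetical helper for illustration only.

```shell
#!/bin/sh
# Mirror the expansion from the workflow with explicit arguments:
#   $1 = GITHUB_HEAD_REF (empty on push events), $2 = GITHUB_REF
branch_name() {
  head_ref="$1"
  ref="$2"
  # Use head_ref when it is non-empty, else strip the refs/heads/ prefix from ref.
  printf '%s\n' "${head_ref:-${ref#refs/heads/}}"
}

branch_name "" "refs/heads/template"         # prints: template
branch_name "feature-x" "refs/pull/7/merge"  # prints: feature-x
```

Since the workflow above only triggers on `push`, the first form is the one that applies, and the resulting branch name is what gets appended to the task definition and repository names.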

gitlab-ci

If you, for some reason, need to take a more manual approach for updating ECS task definitions, well... here is a template for you:

apk add aws-cli

apk add jq # Used to retrieve the revision number from AWS JSON body

aws --version # Check if AWS is installed

chmod +x ./generate-task-definition.sh # generate-task-definition allows us to make a JSON based on environment variables. Make the script executable

sh ./generate-task-definition.sh

cat task_definition.json # Check if the JSON is created

aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin $ecr_uri # get AWS credentials

docker build -t ftb-client . # Build the docker

docker tag ftb-client:latest $ecr_uri/ftb-client:ftb-$CI_COMMIT_SHORT_SHA # Tag the docker with the commit hash

docker push $ecr_uri/ftb-client:ftb-$CI_COMMIT_SHORT_SHA # Upload the image to our AWS ECR

REGISTERED_TASK=$(aws ecs register-task-definition --cli-input-json file://task_definition.json) # Create a new task definition in ECS that uses the latest git hash as image. The response is a JSON body containing the revision number

LATEST_REVISION=$(echo "$REGISTERED_TASK" | jq -r '.taskDefinition.revision') # Extract the revision number from the response above

echo $LATEST_REVISION # Check

aws ecs update-service --cluster ftb-Client-cluster --service ftb-client-service --task-definition ftb-client-task-definition:$LATEST_REVISION --platform-version 1.4.0 # Update the current service to use the new revision number

Let's see what's happening here!

- When we deploy a new version of our Docker image, we create a new task definition with an updated revision number. We create this definition by passing a JSON file to the 'aws ecs register-task-definition' command. This JSON file needs one dynamic value, though: every time we push a new image to ECR, we tag it with the shortened commit hash for identification purposes, and the file has to reference that tag. I use a script to generate the file, which makes it easier to fill in all parameters.

#!/bin/bash

# Create a JSON task definition file

cat <<EOF > task_definition.json

{

"containerDefinitions": [

{

"essential": true,

"image": "780718310965.dkr.ecr.eu-west-1.amazonaws.com/ftb-client:ftb-$CI_COMMIT_SHORT_SHA",

"name": "ftb-client-container",

"logConfiguration" : {

"logDriver" : "awslogs",

"options" : {

"awslogs-group" : "/ecs/ftb/client",

"awslogs-region" : "eu-west-1",

"awslogs-stream-prefix" : "/ecs/ftb/client"

}

},

"portMappings": [{"containerPort": 80,

"hostPort": 80}]

}

],

"networkMode": "awsvpc",

"cpu": "256",

"family": "ftb-client-task-definition",

"taskRoleArn": "arn:aws:iam::780718310965:role/ftbClientECSTaskRole",

"executionRoleArn": "arn:aws:iam::780718310965:role/ftbClientECSTaskExecutionRole",

"memory": "512",

"requiresCompatibilities": ["FARGATE"]

}

EOF

- docker build -t ftb-client . && docker tag ftb-client:latest $ecr_uri/ftb-client:ftb-$CI_COMMIT_SHORT_SHA && docker push $ecr_uri/ftb-client:ftb-$CI_COMMIT_SHORT_SHA

Here we build the Docker image, tag it with the current commit hash that triggered the pipeline and push it to our previously created ECR repository.

- REGISTERED_TASK=$(aws ecs register-task-definition --cli-input-json file://task_definition.json)

Now that we have pushed the image to our ECR repository you should be able to see it! The next step is to actually tell our ECS service to use it. First of all we register the new task definition which we created earlier. Remember, in this task definition we point to the commit hash.

- aws ecs update-service … --task-definition ftb-client-task-definition:$LATEST_REVISION

Finally, we update our ECS service to use our latest task definition. When we trigger this command, Fargate will automatically create a new container with the newest image from our ECR repository. Once that new container is running it will drain the previous container.

End Result

If we run the Infrastructure and Client CI/CD pipelines you can visit your ALB URL and behold your NGINX page which is running in Fargate. 🎉
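As a last check from the command line, one could wait for the service to settle and then request the page through the ALB. This is only a sketch: `smoke_test` and `is_expected_status` are hypothetical helpers, the accepted codes mirror the `200,307` matcher of the target group health check, and the cluster and service names come from the Terraform above. It assumes `curl` and an authenticated AWS CLI are available.

```shell
#!/bin/sh
# Succeed for the status codes the target group health check accepts.
is_expected_status() {
  case "$1" in
    200|307) return 0 ;;
    *)       return 1 ;;
  esac
}

# Wait for the ECS service to stabilise, then request the given URL.
smoke_test() {
  url="$1"
  aws ecs wait services-stable \
    --cluster ftb-Client-cluster --services ftb-client-service || return 1
  status="$(curl -s -o /dev/null -w '%{http_code}' "$url")"
  echo "HTTP status: $status"
  is_expected_status "$status"
}

# Usage (replace the placeholder with the DNS name of your ALB):
#   smoke_test "http://<alb-dns-name>/" && echo "NGINX is up"
```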